Have you ever looked up at a starry night sky, seen a shooting star and made a wish? I am sure you have. Well, look again and look carefully. Are you sure it is a shooting star, or could it be something else? Can you tell for sure? Well, maybe if your wish comes true, then you can tell with certainty that it was a shooting star, no?

This Fall semester at New College of Florida, 7 students* in the Applied Data Science master’s program joined the worldwide effort to analyze data collected from cameras watching the night skies. The effort is known as the CAMS project (Cameras for Allsky Meteor Surveillance), a NASA-sponsored initiative with the SETI Institute under the leadership of Dr. Peter Jenniskens. It aims to detect meteors using data collected from multiple cameras that monitor night-time sky activity. Since 2011, 19 locations worldwide have been launched to collect such data, which are intended to help astronomers and researchers detect meteors, calculate their orbit trajectories and trace their origins.

To date, many researchers and data scientists have tackled the challenging task of analyzing these large datasets with the help of data science and machine learning techniques. But this process wasn’t always easy. In fact, scientists had to travel to the camera locations, grab the video data, come back to their offices and spend hours watching dark, almost uneventful video footage to recognize meteors. In 2017, a team from Frontier Development Lab (FDL) automated this process and built an initial data processing pipeline. Since then, the CAMS AI pipeline has been improved yearly, leading to 6x growth, and in 2021 a team from SpaceML improved the AI models’ precision and recall and built a portal to view meteor data from all over the world (more information here).

A Hands-on Capstone Project Course

We took up this challenge as part of our Practical Data Science course, offered at New College every Fall semester in partnership with companies and organizations. This is a major hands-on activity in our master’s program, taken after all foundational coursework is completed, and it serves one main purpose: to connect our students with industry and give them hands-on experience in applying data science to real-world problems. Companies and organizations present their problems along with matching datasets and seek help from student teams, who develop solutions, models, algorithms, and applications that address these problems. Under my supervision as the course instructor, we start the process as early as the Spring semester by soliciting projects and datasets from companies. We then compile and present them to the cohort who will enroll in the course, oversee the communications that lead to student-team and team-project assignments, and finally launch each project in the first week of the Fall semester. In Fall 2021, 14 project ideas were proposed by 4 organizations, one of which was the SpaceML-led CAMS project. Two of the five teams favored the CAMS project, and each developed a complete data science pipeline over the course of the 13-week semester.

Our connection with the CAMS project came through Ms. Siddha Ganju, a computer scientist and Artificial Intelligence expert who automated the network and process that produces and prepares the dataset related to night-sky observations. She also developed a Machine Learning algorithm to classify night-sky activity as meteor or non-meteor. This was a great starting point for the teams because a) they had benchmark results and findings to compare against, and b) Ms. Ganju provided invaluable domain expertise and guidance to the teams. Ms. Ganju and the rest of the FDL team offered a wealth of experience that helped the teams make sense of the data attributes, the deep learning model that was developed, the actual Python code and the findings obtained. Throughout the semester, we met twice a week in class, and our project sponsor, Ms. Ganju, was also present in many of these meetings.

What Did We Find Out?

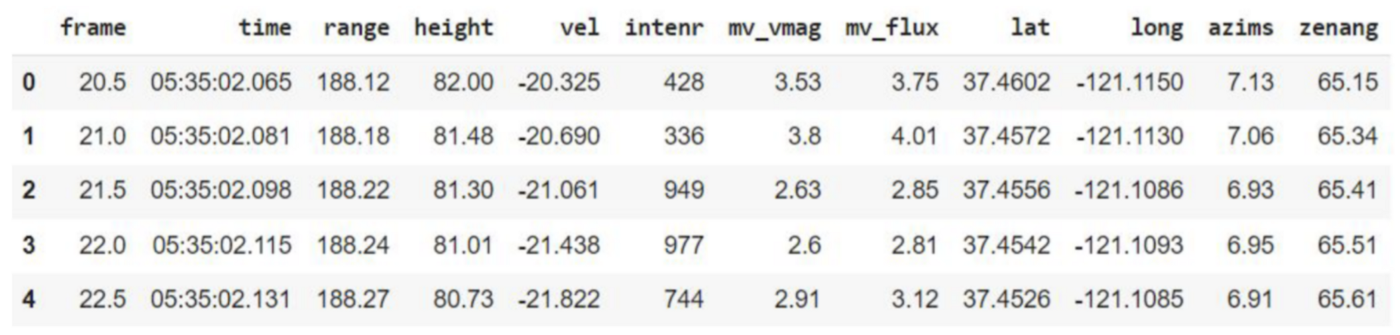

The teams started out with a large dataset that included a single row for each time-stamped, geo-referenced night-sky observation acquired from one or more cameras (see Table 1 for five rows of data). Interestingly, this came in a different format from what one would naturally expect, i.e., night-sky camera footage or images showing the illumination in the sky. Instead, it included numeric data, extracted through a separate process, that corresponded to the coordinates, altitude, brightness, and velocity of the object observed in the sky.

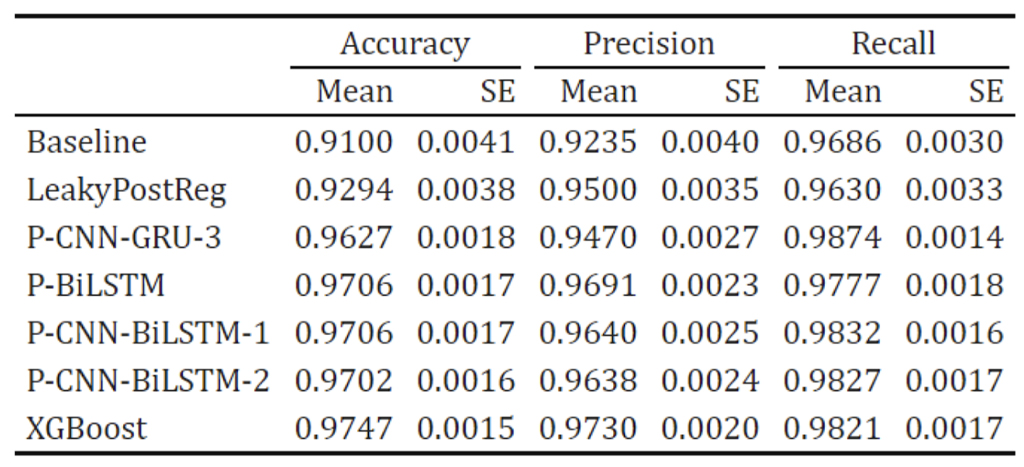

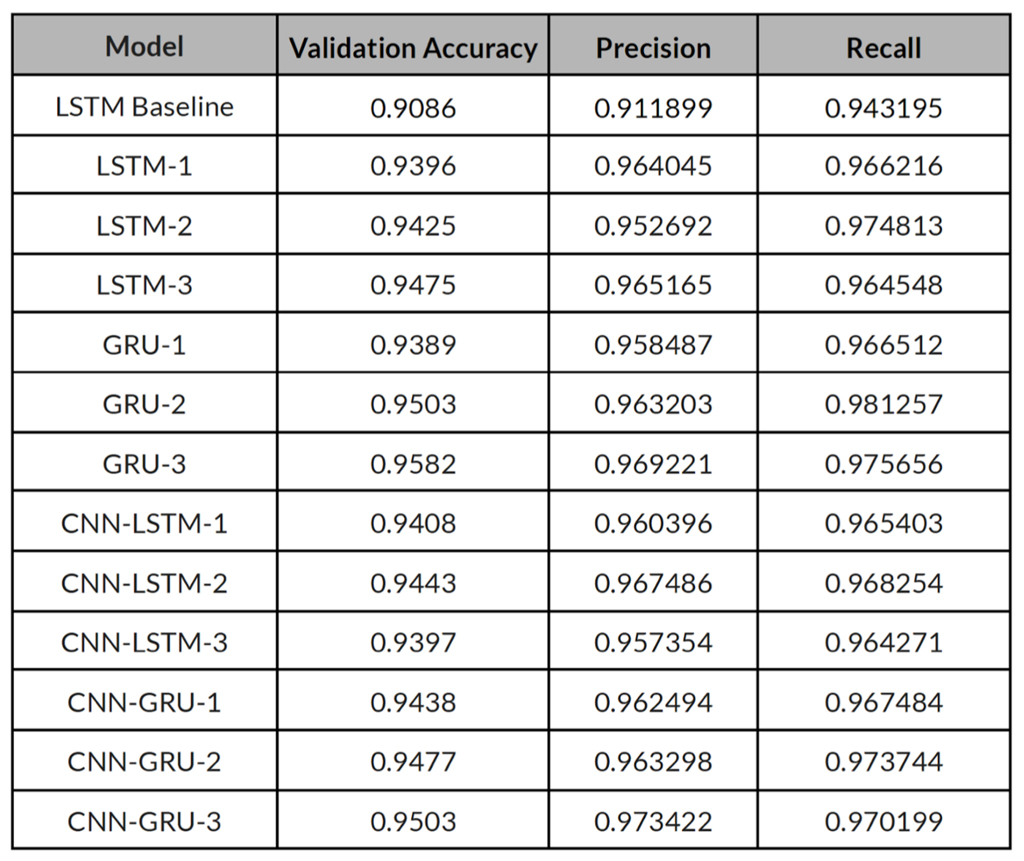

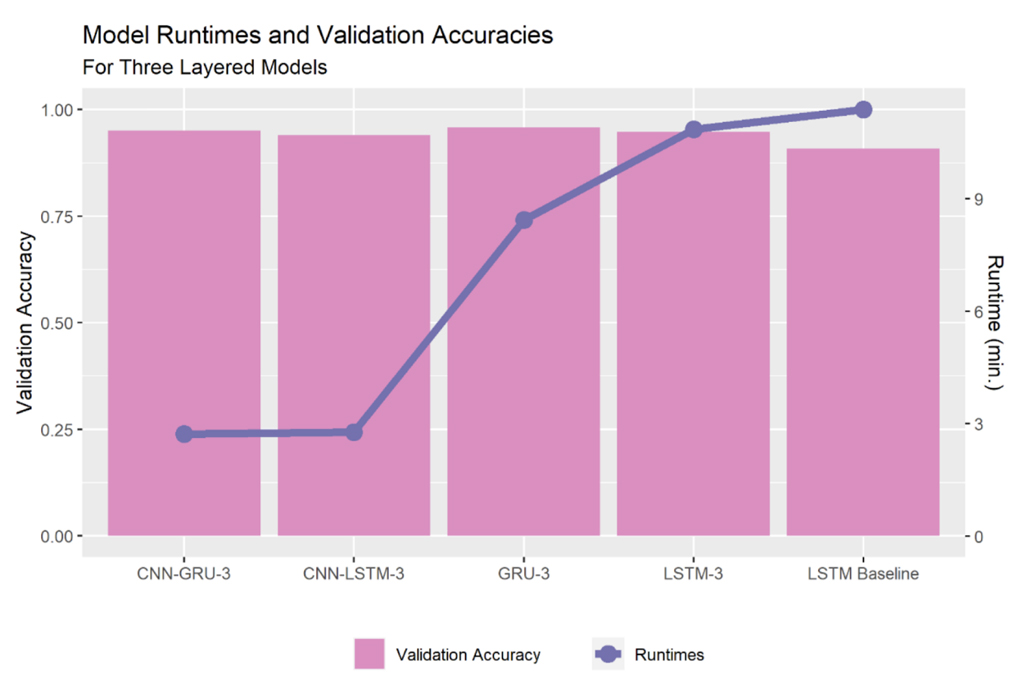

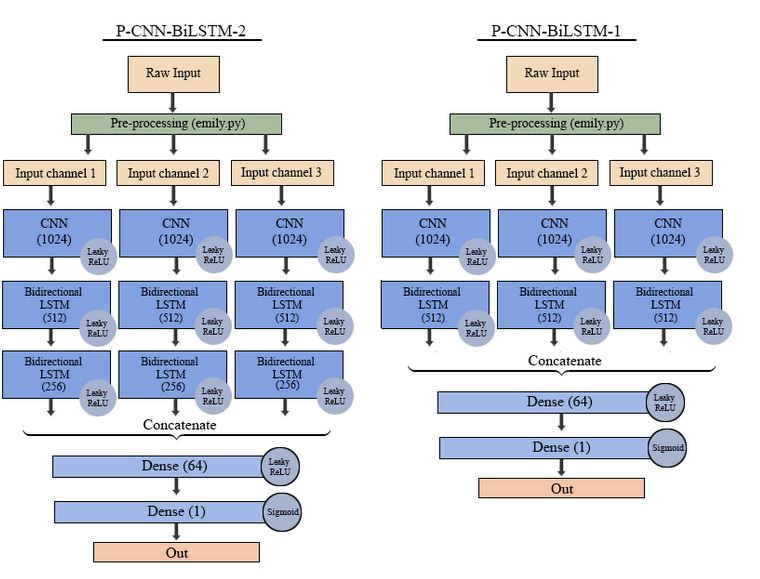

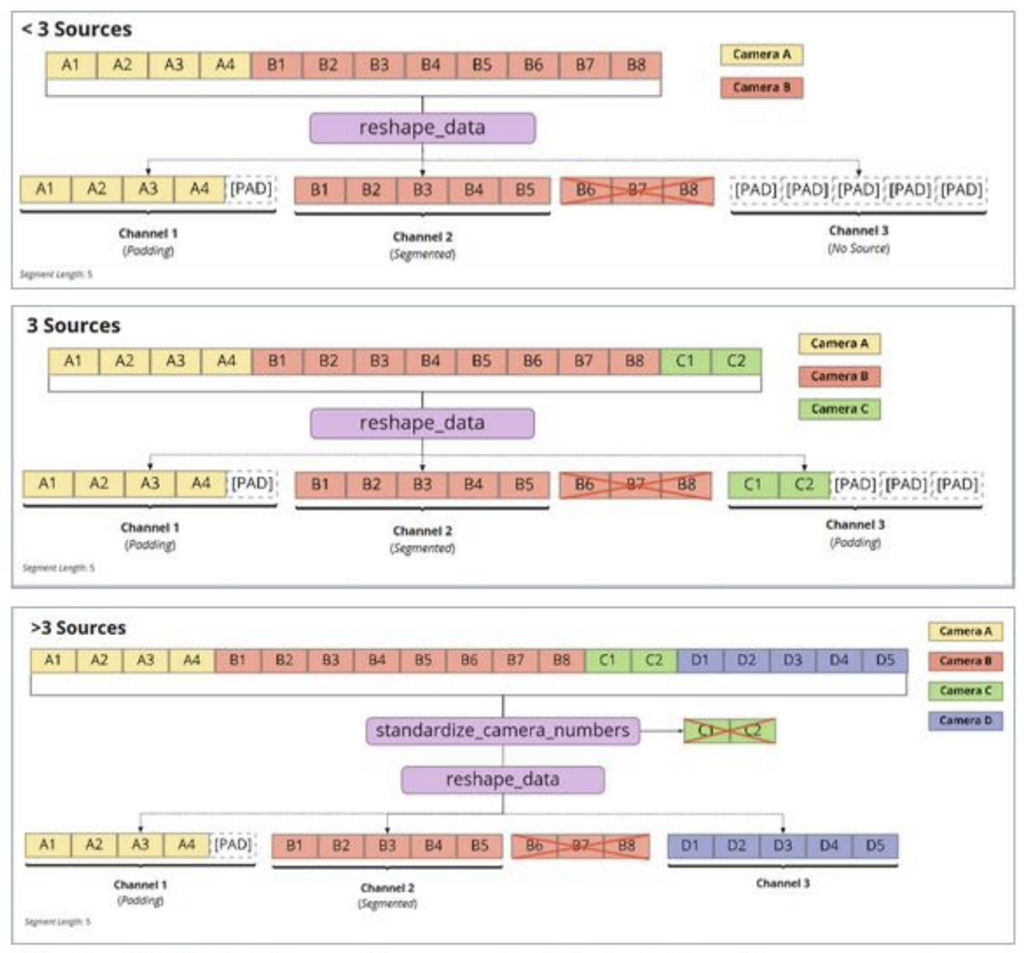

Both teams had many ideas for developing several machine learning models, and it was quite enjoyable throughout the semester to discuss and guide them towards the final solution. To start off, however, they had to do a lot of data preprocessing and data preparation. The dataset shown in Table 1 had tens of thousands of records, which were not clearly associated with distinct cameras and which included many outlier records. In addition, depending on the predictive models to be used, the data had to be formatted into a specific structure. While some team members worked on these tasks, others conducted comprehensive research on possible ML models that could be employed.

In the end, the two teams developed several deep learning and machine learning models that included the following variations: BiLSTM, BiLSTM with multiple layers, Parallel LSTM, GRU, CNN, CNN-LSTM, CNN-GRU, Transformer, XGBoost. They used GPU-accelerated frameworks like TensorFlow, PyTorch, RAPIDS and NVTabular on four NVIDIA Quadro RTX 6000 GPUs with 24GB memory.

The motivation of the teams towards developing, training, and testing each of these models was simply amazing! They spent countless hours training, re-training, analyzing, and discussing. The class discussions that led to the findings that we share below were so enjoyable. We had many sessions where the two teams, who normally worked on the same problem independently, ended up offering ideas to one another, leading to synergistic models that produced much improved results. See, for instance, how one team processed the multi-camera input into meaningful segments (Figure 2-bottom) that can be fed into a parallel BiLSTM network that also has convolutional layers as suggested by the other team (Figure 2-top).
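To give a feel for the data preparation described above, here is a minimal sketch in plain Python. It is not the teams' code: the column layout, the `camera_id` field, the median-based outlier rule and the names `drop_outliers` and `to_segments` are all assumptions made for illustration, since the real CAMS attributes are not listed in this article. The sketch removes implausible records, groups the remaining rows by camera, and pads each camera's sequence to a fixed length so that it could be fed to a sequence model.

```python
from collections import defaultdict
from statistics import median

# Hypothetical rows: (camera_id, timestamp_s, brightness, velocity_km_s).
# These fields and values are illustrative, not the actual CAMS schema.
ROWS = [
    ("cam_a", 0.00, 2.1, 31.0),
    ("cam_a", 0.04, 2.4, 30.5),
    ("cam_a", 0.08, 2.2, 900.0),  # implausible velocity, treated as an outlier
    ("cam_b", 0.00, 1.9, 29.8),
    ("cam_b", 0.04, 2.0, 30.1),
]


def drop_outliers(rows, col, max_ratio=5.0):
    """Keep rows whose value in column `col` is at most max_ratio times the median."""
    med = median(r[col] for r in rows)
    return [r for r in rows if r[col] <= max_ratio * med]


def to_segments(rows, seq_len, pad=(0.0, 0.0)):
    """Group rows by camera, sort by time, then pad or truncate to seq_len steps."""
    by_camera = defaultdict(list)
    for cam, t, brightness, velocity in rows:
        by_camera[cam].append((t, brightness, velocity))

    segments = {}
    for cam, obs in by_camera.items():
        obs.sort()  # tuples sort by timestamp first
        feats = [(b, v) for _, b, v in obs][:seq_len]
        feats += [pad] * (seq_len - len(feats))
        segments[cam] = feats
    return segments


clean = drop_outliers(ROWS, col=3)
segments = to_segments(clean, seq_len=3)
for cam, seq in segments.items():
    print(cam, seq)
```

Each camera ends up with a sequence of the same length, which is the kind of fixed structure that recurrent or convolutional models typically expect as input.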

Two teams working on a dataset and a problem for 13 weeks did pay off! The teams were able to improve the earlier prediction results obtained by SpaceML by a significant margin, thanks to two original ideas: a) a parallel LSTM model that is much better suited to the dataset provided, because the data represent multiple cameras and their associated data records, and b) a CNN-LSTM model that proved to be quite effective and also much faster, thanks to the CNN layers’ ability to extract critical features for the LSTM to take advantage of.
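The CNN-LSTM idea can be sketched roughly as follows in PyTorch, one of the frameworks the teams used. This is only an illustrative sketch, not the teams' architecture: the class name `CNNLSTMClassifier`, the layer sizes, the number of input features and the single-logit output are all assumptions. A 1D convolution first extracts local features along the time axis, and a bidirectional LSTM then summarizes the resulting sequence into a meteor versus non-meteor score.

```python
import torch
from torch import nn


class CNNLSTMClassifier(nn.Module):
    """Illustrative CNN-LSTM binary classifier (all sizes are assumptions)."""

    def __init__(self, n_features=4, conv_channels=16, hidden_size=32):
        super().__init__()
        # Convolution over time extracts local patterns from the raw features.
        self.conv = nn.Sequential(
            nn.Conv1d(n_features, conv_channels, kernel_size=3, padding=1),
            nn.ReLU(),
        )
        # The LSTM reads the convolved sequence in both directions.
        self.lstm = nn.LSTM(
            conv_channels, hidden_size, batch_first=True, bidirectional=True
        )
        self.head = nn.Linear(2 * hidden_size, 1)

    def forward(self, x):
        # x: (batch, time, features); Conv1d expects (batch, channels, time).
        z = self.conv(x.transpose(1, 2)).transpose(1, 2)
        _, (h_n, _) = self.lstm(z)
        # h_n: (2, batch, hidden) for a single bidirectional layer.
        last = torch.cat([h_n[0], h_n[1]], dim=1)
        return self.head(last).squeeze(-1)  # one logit per sequence


model = CNNLSTMClassifier()
batch = torch.randn(8, 20, 4)  # 8 random sequences, 20 time steps, 4 features
logits = model(batch)
print(logits.shape)
```

A parallel variant along the lines described above could run one such branch per camera and concatenate the branch outputs before the final linear layer; that extension is left out here to keep the sketch short.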

What Did We Learn?

As the course instructor who oversaw and mentored the entire process, I can see several takeaways for the students, for myself, and for the overall project.

- Domain knowledge is a must! I see this all the time as data science is applied in a variety of settings: space, bioinformatics, retail and e-commerce, finance, etc. One must have a good understanding of the underlying notions and dynamics that lead to the datasets and behaviors that we observe. In the case of CAMS, understanding how the data was generated, why it is in the form that was provided to us, and what each data attribute means in relation to the natural phenomenon that was observed was critical for the success of the models developed.

- The dirtier your hands get, the better your results. I mean there is no free lunch. If you avoid coding, or if you do not try a different version of the model with a different twist (and many, many of those twists), it is hard to get where you would like to be. It is a lot of tedious work of course, but it must be done. No pain, no gain.

- There is always room for improvement. It is not only about approaching the problem from a certain (and hopefully the right) angle, but also about soliciting ideas and contributions from all team members and other stakeholders. There is no wrong idea or question; one comment or question that might be seemingly very simple or basic might lead to an interesting breakthrough, and hence the improvements or new solutions one is looking for.

- Teams work efficiently and effectively when members have complementary skills and knowledge. To me, this is another no-brainer, but I observe that it is overlooked a lot. Data scientists are not just statisticians or computer scientists; they come from a variety of backgrounds. One particular background may provide an insight or approach that might prove quite valuable for the task at hand. For instance, a team member with a physics background may provide better domain knowledge and understanding of the dataset and offer a more suitable approach, such as a mathematical model from the physics domain, in modeling the behavior of the heavenly bodies. Or in a different context, say retailing or e-commerce, a team member with a psychology background may offer a new perspective or explanation on particular consumer behavior. These approaches and perspectives might simply result in the much-needed leap in the project.

In Conclusion…

In the end, this was an amazing learning experience for our students on a very exciting topic (space and meteors!), and it led to good results with reusable models that employed creative ideas. As someone who does this year after year, I can tell that every project offers its unique challenges that we all (students, company advisors, myself) need to navigate together. Data Science is a fast-developing field after all, with learning opportunities for everyone, even the highly skilled data scientist or programmer, or a professor of data science. What we experienced over the course of 13 weeks in the CAMS project was a collective learning experience, and a model that clearly works: it brings together industry folks looking for talent to address their big data challenges and students looking for real-life experience dealing with data and exciting yet challenging problems.

Looking forward to launching the next offering of our Practical Data Science course with another project in partnership with SpaceML for Fall 2022, with the help of more industry partners, current and new…

*Team Radiant: Sara Haman, Vivienne Prince, Reilly Kalani Stanton and Team Brilliant: Dimitri Angelov, Timothy McCormack, Amanda Norton, Steven Spielman

References

- GitHub repos by Team Radiant and Team Brilliant: available upon request; please contact the author

- LinkedIn pages: Sara Haman, Vivienne Prince, Reilly Kalani Stanton, Dimitri Angelov, Timothy McCormack, Amanda Norton, Steven Spielman

This article was originally published on medium.com